Innovate with Google Cloud

Unlock the Potential of Your Business with Our Google Cloud Expertise

Whether your business is early in its journey or well on its way to digital transformation, Google Cloud can help solve your toughest challenges.

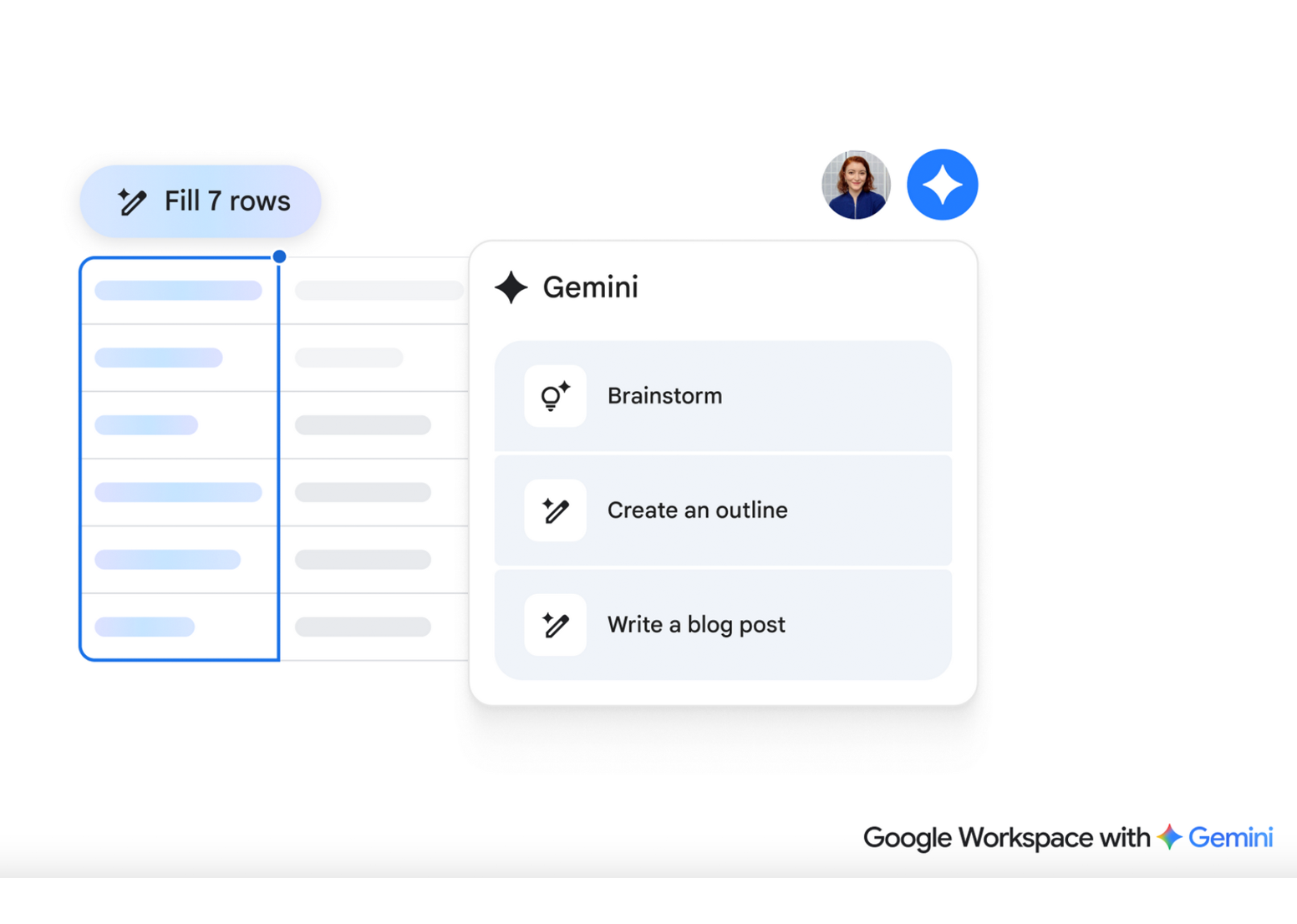

Create, connect, and collaborate with the power of AI. Home to the apps more than 3 billion users know and love — Gmail, Drive, Meet, and more.

Make the world your platform. Build with maps in over 250 countries and territories, powered by data updated 100 million times daily.

With Cloud Ace as your trusted partner, your IT team gains the confidence and skills needed to fully leverage Google Cloud technologies. Whether you're starting your cloud journey or optimizing existing infrastructure, we ensure a smooth and efficient transition.

Contact us

Cloud Ace is a leading cloud solutions provider specializing in Google Cloud technologies. As a Google Cloud Premier Partner, we offer expert guidance, implementation, and management services to help businesses leverage the full potential of cloud computing.

Contact us

Gold Support is a substantial upgrade from entry-level tiers. It's built for businesses that can't afford major disruptions and require proactive support, rapid response times, and access to highly skilled engineers. It's about having a trusted partner in your cloud journey.

Managed Service goes beyond traditional support by providing proactive and comprehensive management of your cloud environment. It's like having a team of cloud experts working behind the scenes to ensure your cloud infrastructure is running smoothly, securely, and efficiently.

Bronze Support is the entry-level customer support tier for cloud services, designed for businesses with basic cloud needs and limited technical expertise, providing essential assistance to get started and address common challenges.

Excellence

Latest news & updates

14 November 2025

11 September 2025

10 September 2025

04 September 2025

Testimonial